Chapter 5. Sensation and Perception

Introduction

Jessica Motherwell McFarlane

Approximate reading time: 38 minutes

Picture yourself standing on a street in a city. You might notice a lot of things moving, like cars and people busy with their day. You might hear music from someone playing on the street or a car horn far away. You could smell the gas from cars or food from a nearby place selling snacks. And you might feel the hard ground under your shoes.

We use our senses to get important information about what’s around us. This helps us move around safely, find food, look for a place to stay, make friends, and stay away from things that could be dangerous.

This chapter will provide an overview of how sensory information is received and processed by the nervous system and how that affects our conscious experience of the world. We begin by learning the distinction between sensation and perception. Then we consider the physical properties of light and sound stimuli, along with an overview of the basic structure and function of the major sensory systems. We will discuss the historically important Gestalt theory of perception and what it can teach us about how we understand visual narratives (comics). Then, we will explore the skin senses of touch, temperature, and pain. Next, we will consider the senses of balance and proprioception. We will conclude our chapter with the often overlooked sense of interoception: the ability to sense what is happening inside our bodies.

Sensation

What does it mean to sense something? Sensory receptors are specialised neurons that respond to specific types of stimuli. When sensory information is detected by a sensory receptor, sensation has occurred. For example, light that enters the eye causes chemical changes in cells that line the back of the eye. These cells relay messages, in the form of action potentials (as you learned when studying biopsychology), to the central nervous system. The conversion from sensory stimulus energy to action potential is known as transduction.

We used to teach, in error, that humans have only five senses: vision, hearing (audition), smell (olfaction), taste (gustation), and touch (somatosensation). We actually have at least 12 senses, each one providing unique information about our inner and outer realities. Below are a mnemonic and definitions to help you learn about these 12 senses:

Very Happy Tigers Snuggle Tiny Pandas Inside Velvet Tents Near Old Elephants

- “Very” stands for Vision: Vision is the capacity to detect and interpret visual information from the environment through light perceived by the eyes.

- “Happy” stands for Hearing: Hearing is the sense that allows perception of sound by detecting vibrations through an organ, such as the ear.

- “Tigers” stands for Taste: Taste is the sense that perceives different flavours in substances, such as sweet, sour, salty, bitter, and umami, through taste buds in the mouth.

- “Snuggle” stands for Smell: Smell, or olfaction, is the ability to detect and identify different odours, which are chemical substances in the air, through receptors in the nose.

- “Tiny” stands for Touch: Touch, or tactile sense, involves perceiving pressure, vibration, temperature, pain, and other sensations on the skin.

- “Pandas” stands for Proprioception: Proprioception is the sense that allows the body to perceive its own position, motion, and equilibrium, even without visual cues.

- “Inside” stands for Interoception: Interoception is the sense of the internal state of the body, which can include feelings of hunger, thirst, digestion, or heart rate.

- “Velvet” stands for Vestibular sense: The vestibular sense involves balance and spatial orientation, maintained by detecting gravitational changes, head movement, and body movement.

- “Tents” stands for Thermoception: Thermoception is the sense of heat and the absence of heat (cold) perceived by the skin.

- “Near” stands for Nociception: Nociception is the sensory nervous system’s response to harmful or potentially harmful stimuli, often resulting in the sensation of pain.

- “Old” stands for Oleogustus: Oleogustus is the unique taste of fat, recognised as a distinct taste alongside sweet, sour, salty, bitter, and umami.

- “Elephants” stands for Equilibrioception: Equilibrioception, often considered a part of the vestibular sense, is the physiological sense that helps prevent animals from falling over when walking or standing still.

What is Psychophysics?

Psychophysics is a part of psychology that looks at how physical things around us, like light and sound, affect what we sense and how we think. Gustav Fechner, a German psychologist, started this field. He was the first to explore how the strength of something we sense, like a bright light or a loud sound, is linked to our ability to notice it (Macmillan & Creelman, 2005).

Fechner and other scientists developed ways to measure how well we can sense things. One key thing they look at is how we notice very faint or weak things. The absolute threshold is the dimmest, quietest, or least intense stimulus we can just barely sense. For example, think about a hearing test where you have to say if you hear a very soft sound or not (Wickens, 2002).

The Challenge of Faint Signals

When signals are very faint, it’s hard to be sure if we’re sensing them or not. Our ears always pick up some background noise, so sometimes we might think we heard something when there was nothing, or we might miss a sound that was there. The job is to figure out if what we’re sensing is just background noise or an actual sound mixed in with the noise. This is where signal detection analysis comes in. It’s a method to figure out how well someone can tell the difference between real signals and just noise (Macmillan & Creelman, 2005; Wickens, 2002).

Understanding Signal Detection Analysis

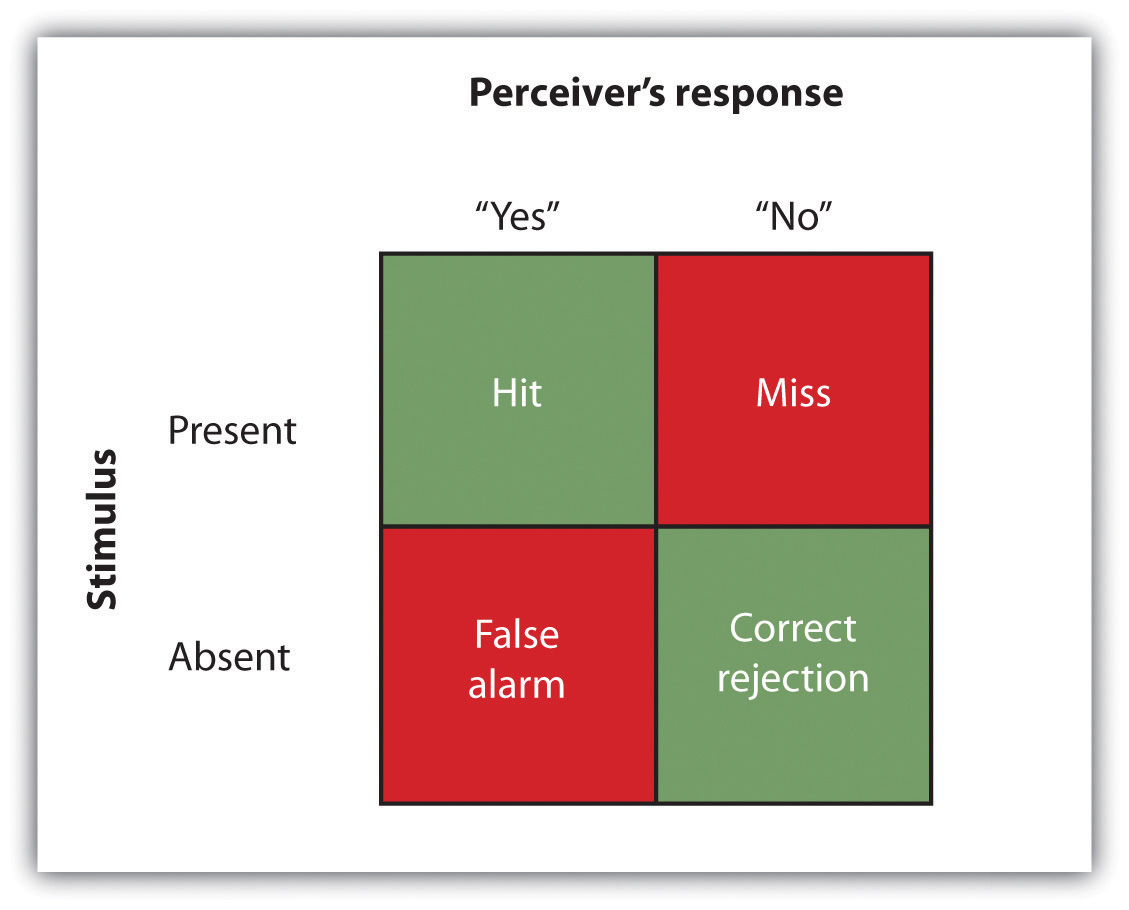

The problem for us is that the very faint signals create uncertainty. Because our ears are constantly sending background information to the brain, you will sometimes think that you heard a sound when none was there, and you will sometimes fail to detect a sound that is there. Your task is to determine whether the neural activity that you are experiencing is due to the background noise alone or is the result of a signal within the noise. The responses that you give on the hearing test can be analysed using signal detection analysis. Signal detection analysis is a technique used to determine the ability of the perceiver to separate true signals from background noise (Macmillan & Creelman, 2005; Wickens, 2002). As you can see in Figure SP.2, “Outcomes of a signal detection analysis,” each judgement trial creates four possible outcomes. A hit occurs when you, as the listener, correctly say “yes” when there is a sound. A false alarm occurs when you respond “yes” to no signal. In the other two cases you respond “no” — either a miss (saying “no” when there was a signal) or a correct rejection (saying “no” when there was in fact no signal).
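The four outcomes described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `classify_trial` and the sample hearing-test data are hypothetical and do not come from the studies cited.

```python
from collections import Counter


def classify_trial(signal_present: bool, said_yes: bool) -> str:
    """Return the signal detection outcome for one judgement trial."""
    if signal_present:
        # A sound was played: "yes" is a hit, "no" is a miss.
        return "hit" if said_yes else "miss"
    # No sound was played: "yes" is a false alarm, "no" is a correct rejection.
    return "false alarm" if said_yes else "correct rejection"


# Hypothetical data: (was a sound played?, did the listener say "yes"?)
trials = [
    (True, True), (True, False), (False, False), (False, True),
    (True, True), (False, False), (True, True), (False, False),
]

# Tally how often each of the four outcomes occurred.
outcome_counts = Counter(classify_trial(signal, answer) for signal, answer in trials)
print(outcome_counts)
```

Counting the outcomes this way is the raw material for any later analysis: every trial falls into exactly one of the four cells.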

The analysis of the data from a psychophysics experiment creates two measures. One measure, known as sensitivity, refers to the true ability of the individual to detect the presence or absence of signals. People who have better hearing will have higher sensitivity than will those with poorer hearing. The other measure, response bias, refers to a behavioural tendency to respond “yes” to the trials, which is independent of sensitivity.

Understanding Sensitivity and Response Bias in Psychophysics Experiments

In psychophysics experiments, we focus on two key concepts: sensitivity and response bias. Sensitivity is about how well someone can detect signals. For instance, someone with excellent hearing is more sensitive and can detect sounds better than someone with average hearing. Signal detection analysis separates true signals from background noise and evaluates the ability to detect faint signals. On the other hand, response bias is about a person’s tendency to say “yes, I noticed something” during tests, which is not necessarily linked to their actual sensitivity. In other words, a response bias is the degree of eagerness a person has to find and report a signal whether it is present or not.
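To make the two concepts concrete, here is a minimal sketch of how they are often quantified. It assumes the common equal-variance Gaussian model from signal detection theory, in which sensitivity is written d′ ("d-prime") and response bias is a criterion c. Those symbols, the function name, and the listener counts below are assumptions for illustration and are not defined in this chapter.

```python
from statistics import NormalDist


def sensitivity_and_bias(hits, misses, false_alarms, correct_rejections):
    """Return (d_prime, criterion) from the four outcome counts.

    Assumes both rates are strictly between 0 and 1; rates of exactly
    0 or 1 would need a correction that this sketch does not include.
    """
    hit_rate = hits / (hits + misses)
    false_alarm_rate = false_alarms / (false_alarms + correct_rejections)
    z = NormalDist().inv_cdf  # converts a proportion to a z-score
    d_prime = z(hit_rate) - z(false_alarm_rate)             # sensitivity
    criterion = -0.5 * (z(hit_rate) + z(false_alarm_rate))  # response bias
    return d_prime, criterion


# Hypothetical listener A: says "yes" about as often as "no".
d_a, c_a = sensitivity_and_bias(hits=40, misses=10, false_alarms=10, correct_rejections=40)

# Hypothetical listener B: eager to say "yes", so more hits but many more false alarms.
d_b, c_b = sensitivity_and_bias(hits=45, misses=5, false_alarms=25, correct_rejections=25)

print(f"Listener A: d' = {d_a:.2f}, c = {c_a:.2f}")
print(f"Listener B: d' = {d_b:.2f}, c = {c_b:.2f}")
```

In this made-up example, listener B scores more hits than listener A but has lower sensitivity. The extra hits come from a stronger tendency to say "yes" (a negative criterion), not from better hearing.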

Example of Sensitivity in Medical Scenarios: Another application of signal detection occurs when medical technicians study body images for the presence of cancerous tumours. Here, a miss (in which the technician incorrectly determines that there is no tumour) can be very costly, but false alarms (referring patients who do not have tumours to further testing) also have consequences, such as unnecessary exposure to radiation. The ultimate decisions that the technicians make are based on the quality of the signal (clarity of the image), their experience and training (the ability to recognise certain shapes and textures of tumours), and their best guesses about the relative costs of misses versus false alarms.

Example: Response bias in real life – the forest fire warden

Imagine being a seasoned forest fire warden, in charge of monitoring a vast, vulnerable woodland. Your duty revolves around detecting the tiniest signs of a potential fire, such as a hint of smoke in the distance, a lightning strike, or a spark from a neglected campfire. In this scenario, sounding a false alarm about a possible fire may seem less severe than overlooking a small warning sign that could ignite a full-blown forest blaze. Accordingly, you adopt an extremely cautious response bias, alerting your team at the slightest suspicion, even amid uncertainties. This might mean your precision could be low due to recurrent false alarms, but it’s a sacrifice made for the greater goal of preserving the forest. Despite the risk of numerous false alarms, this heightened vigilance underlines the critical role fire wardens play, potentially saving entire forests through their unwavering alertness to the smallest shifts in their surroundings.
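The warden's trade-off can be illustrated with a small simulation-free sketch. It assumes a simple model that is not spelled out in this chapter: evidence on "no fire" days follows a standard normal distribution, evidence on "fire" days follows the same distribution shifted upward by the warden's sensitivity, and the warden raises the alarm whenever the evidence exceeds a personal threshold. All names and numbers are hypothetical.

```python
from statistics import NormalDist


def response_rates(sensitivity, threshold):
    """Return (hit_rate, false_alarm_rate) for a given alarm threshold."""
    no_fire = NormalDist(0.0, 1.0)       # evidence when there is no fire
    fire = NormalDist(sensitivity, 1.0)  # evidence when a fire is starting
    hit_rate = 1.0 - fire.cdf(threshold)
    false_alarm_rate = 1.0 - no_fire.cdf(threshold)
    return hit_rate, false_alarm_rate


# Same sensitivity, different response bias.
vigilant_warden = response_rates(sensitivity=1.5, threshold=0.0)  # alerts at the slightest suspicion
strict_warden = response_rates(sensitivity=1.5, threshold=1.5)    # waits for strong evidence

print(f"Vigilant: hits {vigilant_warden[0]:.0%}, false alarms {vigilant_warden[1]:.0%}")
print(f"Strict:   hits {strict_warden[0]:.0%}, false alarms {strict_warden[1]:.0%}")
```

Under these assumptions, lowering the threshold catches more real fires only at the price of more false alarms. Nothing about the warden's ability to see smoke has changed, only the willingness to report it.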

Weber’s Law and Just Noticeable Difference

Another important idea is the difference threshold, or just noticeable difference (JND). This is about how small a change in something needs to be before we can notice it. Ernst Weber, a German physiologist, found out that noticing differences depends more on the ratio of the change to the original thing than on the size of the change itself (Weber, 1834/1996). For example, adding a teaspoon of sugar to a cup of coffee that already has a lot of sugar won’t make much difference. But if the coffee had very little sugar to begin with, you’ll notice the extra teaspoon more.

Example: Weber’s Law in daily life

Weber’s Law also applies to everyday things, like playing a video game. If the game is already loud and the volume goes up a little, you might not notice. But if the game is quiet and the volume increases by the same amount, you’ll probably notice the change. So, how much we notice a change depends not just on the change itself, but on how big that change is compared to what we started with.
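The ratio idea behind Weber's Law can be sketched as a short calculation. In this sketch, the just noticeable difference is taken to be a fixed fraction of the starting intensity. The fraction of 0.1 and the volume levels are made-up values in arbitrary units, chosen only for illustration.

```python
def just_noticeable_difference(baseline, weber_fraction):
    """Smallest change that can be noticed at a given starting intensity."""
    return weber_fraction * baseline


def is_noticeable(baseline, change, weber_fraction):
    """True if the change is at least as large as the JND at this baseline."""
    return abs(change) >= just_noticeable_difference(baseline, weber_fraction)


WEBER_FRACTION = 0.1       # hypothetical constant ratio
quiet_volume = 20.0        # arbitrary intensity units
loud_volume = 80.0
volume_increase = 5.0      # the same absolute change in both cases

print(is_noticeable(quiet_volume, volume_increase, WEBER_FRACTION))  # quiet game
print(is_noticeable(loud_volume, volume_increase, WEBER_FRACTION))   # loud game
```

With these numbers, the quiet game needs a change of only 2 units to be noticed, so an increase of 5 stands out. The loud game needs a change of 8 units, so the same increase of 5 goes unnoticed.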

Perception

What is the difference between sensation and perception? Suppose you walk into a kitchen and smell baking naan. The sensation is the scent receptors detecting the odour of yummy, hot naan, but the perception may be “Mmm, this smells like the naan Grandma used to bake when the family gathered for holidays.” In this example, sensation is a physical process, whereas perception is psychological. Then there is the reciprocal back and forth between sensation and perception: the smell of naan makes us think of Grandma’s naan, which reminds us of her hugs, which motivates us to stay in the kitchen longer so we can breathe in more yummy naan smells and linger in the memories we have about the loving times we had with Grandma.

While our sensory receptors are constantly collecting information from the environment, it is ultimately how we interpret that information that affects how we interact with the world. Perception refers to the way sensory information is organised, interpreted, and consciously experienced. Perception involves enviro-bodily, self-in-search, and reciprocal processing. Enviro-bodily (formerly called bottom-up) processing refers to sensory information coming from the environment or our own physical body to our senses (e.g., Gregory, 1966). Self-in-search (formerly called top-down) processing refers to our knowledge and expectancy inspiring our active search of the environment or our own physical body to find something (Egeth & Yantis, 1997; Fine & Minnery, 2009; Neisser, 1976; Yantis & Egeth, 1999). Reciprocal processing (an influence that goes back and forth) refers to the environment or our body offering up sensory information that then changes our ability to sense more, or less, of the environment or our body.

- Enviro-bodily processing (also known as “bottom-up”): Imagine that you and some friends are sitting in a crowded restaurant eating lunch and talking. It is very noisy, and you are concentrating on your friend’s face to hear what she is saying. Then the sound of breaking glass and the clang of metal pans hitting the floor rings out; the server dropped a large tray of food. Although you were attending to your meal and conversation, that crashing sound would likely get through your attentional filters and capture your attention. You would have no choice but to notice it. That attentional capture would be caused by the sound from the environment: it would be enviro-bodily processing.

- Self-in-search processing (also known as “top-down”): In contrast, self-in-search processes are generally goal directed, slow, deliberate, effortful, and under your control (Fine & Minnery, 2009; Miller & Cohen, 2001; Miller & D’Esposito, 2005). For instance, if you misplaced your keys, how would you look for them? If you had a yellow key fob, you would probably look for yellowness of a certain size in specific locations, such as on the counter, coffee table, and other similar places. You would not look for yellowness on your ceiling fan, because you know keys are not normally lying on top of a ceiling fan. That act of searching for a certain size of yellowness in some locations and not others would be self-in-search processing: under your control and based on your experience.

- Reciprocal processing: Imagine you’re taking a leisurely stroll through a serene forest. As you walk, you notice the rustling leaves and the gentle breeze brushing against your skin. The environment offers up its calming sights and sounds, shaping your perceptual experience. In return, your newly refreshed attentive presence in the forest allows you to notice more subtle shifts in nature — the chirping of birds and the scent of pine, enriching the environment with your own sensory inputs. This mutual exchange of information between your ever-changing body and a living environment exemplifies reciprocal perception (Smith & Thelen, 2003). This process allows you to grow a different quality of presence in the environment where both you and the surroundings actively contribute to the unfolding perceptual experience, creating a dynamic and interconnected relationship (Witherington, 2005). Eleanor Gibson referred to this form of reciprocal processing as the ecological approach to perception (Gibson, 2000).

How Emotions Affect Our Perceptions

Imagine you’re watching a suspenseful movie and suddenly every sound seems louder, or you’re walking after a scary story and the shadows seem darker. Ever wondered why? Elizabeth Phelps might have an answer for that. She and her colleagues found that our feelings — whether we’re scared, happy, sad, or excited — can change the way we perceive and remember things (Phelps, Ling, & Carrasco, 2006).

Phelps and her team realised that when something makes us feel a certain way (like that jump scare in a horror movie), it’s not just that we remember it better, but it also stands out more at that moment (Phelps, Ling, & Carrasco, 2006). It’s like our emotions put on a pair of magnifying glasses that change the way we see the world.

Since then, other researchers have validated that emotions can influence our perception (e.g., Riggs, Fujioka, Chan, McQuiggan, & Anderson, 2019). For example, some researchers found that when we’re feeling emotions, we pay more attention, especially if the content is negative; think about why we can’t look away from a car crash (Pool, Brosch, Delplanque, & Sander, 2016). Others note that when we’re emotionally charged, our focus gets a super-boost, making everything clearer (Bocanegra & Zeelenberg, 2017). According to Mather and Schoeke (2011), the more emotional an event is, the better it sticks in our memory, kind of like how we can’t forget that one embarrassing moment from high school.

In sum, emotions aren’t just about ‘feels.’ Emotions powerfully shape the way we see and interact with everything around us. Think of it as your emotions giving you a personalised, experiential tour of the world. We will discuss more about this in the chapter on emotion.

Predictive coding in perception

Andy Clark (2013) presents another kind of reciprocal (back and forth) model of perception, one based on prediction. This concept, known as predictive coding, suggests that our brains constantly formulate hypotheses or predictions about our environment, and that these hypotheses are continually updated, based on incoming sensory information. This is a significant departure from the traditional, passive model of perception, where the brain is seen as merely reacting to sensory stimuli. Furthermore, Clark integrates this concept with the theory of embodied cognition, which emphasises the role of our physical bodies and the environment in shaping cognitive processes. In essence, cognition isn’t merely a product of internal brain computations but is also influenced by our interactions with the world around us.

Returning to our example of the lost keys but looking through the lens of predictive coding, if you misplaced your keys, you would probably start to predict where you might have left them based on your past behaviours and the layout of your environment. For example, if you typically drop your keys on the kitchen counter when you get home, your brain will predict that this is the most likely location and direct your attention there first. As you search for your keys, your brain constantly updates its predictions based on the incoming sensory information. If you don’t find your keys on the kitchen counter, this discrepancy between your prediction (keys on the counter) and the actual sensory input (no keys on the counter) generates a prediction error.

Your brain uses this prediction error to update its model of the world. It might now predict that the keys are in your coat pocket, if that’s another place you often leave them. You’ll continue this cycle of prediction and error correction until you locate your keys. This is predictive coding at work: your brain makes educated guesses about where your keys are, then refines those guesses based on what you actually find as you search. It’s a dynamic process of hypothesis testing, with your brain continuously updating its predictions to align with the reality of your sensory input.
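The cycle of prediction and error correction in the key search can be sketched as a toy program. This is a loose illustration of the idea, not Clark's model: the locations, the starting probabilities, and the simple "rule it out and rescale" update are all assumptions made for the example.

```python
def search_for_keys(beliefs, actual_location):
    """Check places in order of predicted likelihood, updating after each miss.

    `beliefs` maps each place to a hypothetical probability that the keys are there.
    Returns the list of places checked, in order.
    """
    beliefs = dict(beliefs)  # work on a copy so the caller's predictions are untouched
    checked = []
    while beliefs:
        guess = max(beliefs, key=beliefs.get)  # current best prediction
        checked.append(guess)
        if guess == actual_location:
            return checked  # prediction matched the sensory input
        # Prediction error: the keys were not there, so rule this place out
        # and rescale the remaining predictions so they still sum to 1.
        del beliefs[guess]
        total = sum(beliefs.values())
        if total > 0:
            beliefs = {place: p / total for place, p in beliefs.items()}
    return checked


# Hypothetical starting predictions based on past habits.
starting_beliefs = {"kitchen counter": 0.6, "coat pocket": 0.3, "coffee table": 0.1}
places_checked = search_for_keys(starting_beliefs, actual_location="coat pocket")
print(places_checked)
```

Each failed check plays the role of a prediction error: it removes one hypothesis and strengthens the others, which determines where the search goes next.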

Sensory adaptation

Even though our perceptions are formed from our sensations, it’s not the case that all sensations lead to perception. Actually, we frequently don’t notice stimuli that remain fairly steady over long periods. This is referred to as sensory adaptation. Imagine you’re at a concert, and you’ve secured a spot right next to the speakers. The music is extremely loud, to the point where it’s nearly deafening. You’re initially worried about whether you’ll be able to endure the concert at such a high volume. However, as the night goes on and you become heavily focused on the performance, the music seems to become less overpowering. The speakers are still blaring and your ears are still picking up the sound, but you’re no longer as aware of the loudness as you were at the start of the concert. This lack of awareness despite the constant loud music is an example of sensory adaptation. It illustrates that while sensation and perception are closely linked, they’re not the same thing.

Did You See the Gorilla?: Divided attention, perceptual inattention, and change inattention

Attention

Attention (the cognitive process of selectively concentrating on one aspect of the environment while ignoring other things) significantly influences the interaction between sensation and perception. As an example, consider a university student sitting in a lecture, absorbed in checking their emails on their phone. The hum of the professor’s voice, the shuffling of feet, and the occasional whisper from classmates all register as background noise that the student’s senses pick up but their attention filters out. Suddenly, the professor mentions something about an “exam” in the next class. This keyword acts like a spark, instantly redirecting the student’s attention. Although the professor’s voice had been a constant stimulus, it’s only now, with their attention refocused, that the student truly perceives what the professor is saying. Attention has the power to shape our perception from the numerous sensations we continuously encounter (Broadbent, 1958).

Divided Attention

Divided attention, also known as multitasking, refers to the cognitive ability to concentrate on two or more tasks at the same time. This kind of attention is critical in our daily lives, enabling us to process different streams of information concurrently (Treisman, 1960). For example, you might be listening to music while preparing a meal or having a conversation while driving.

The effectiveness of divided attention can vary based on the difficulty and familiarity of the tasks. If at least one task is simple and/or well-practiced, divided attention may be effective. For instance, many people can successfully walk (a well-practiced task) while having a conversation (a potentially complex task) (Spelke, Hirst, & Neisser, 1976). Some researchers suggest, however, that when two tasks are complex and/or unfamiliar, they compete for cognitive resources, often leading to decreased performance on one or both tasks (Pashler, 1994).

In today’s digital age, the concept of divided attention is even more relevant, as we frequently switch between different tasks and media. However, this can also lead to a phenomenon known as “cognitive overload”, in which the demands of multitasking exceed our cognitive capacity, resulting in decreased efficiency and productivity (Sweller, 1988). It’s important to note that despite divided attention being commonplace in our lives, multitasking can lead to errors and can be less efficient than focusing on one task at a time, especially for more complex tasks (Salvucci & Taatgen, 2008).

Perceptual Inattention

Perceptual inattention, or what used to be called “inattentional blindness”, is the failure to perceive a stimulus in the environment. The term “blindness” is specific to vision. We can, however, be inattentive to any incoming sensory stimulus, such as a bad smell, noisy kitchen sounds, or a room that is getting too cold. Since the term “blindness” is not comprehensive enough to describe the entire phenomenon, we will use the term perceptual inattention in this textbook.

One of the most interesting demonstrations of the importance of attention in determining our perception of the environment occurred in a famous study conducted by Daniel Simons and Christopher Chabris (1999). In this study, participants watched a video of people dressed in black and white passing basketballs. Participants were asked to count the number of times the team dressed in white passed the ball. During the video, a person dressed in a black gorilla costume walked between the two teams. You would think that someone would notice the gorilla, right? Nearly half of the people who watched the video didn’t notice the gorilla at all, despite the fact that he was clearly visible for nine seconds. Because participants were so focused on the number of times the team dressed in white was passing the ball, they completely tuned out other visual information. Perceptual inattention is the failure to notice something that is completely visible because the person was actively attending to something else and did not pay attention to other things (Mack & Rock, 1998; Simons & Chabris, 1999).

Watch the video: The Invisible Gorilla (featuring Daniel Simons) (5 minutes)

“The Invisible Gorilla (featuring Daniel Simons) (EMMY Winner)” video by BeckmanInstitute is licensed under the Standard YouTube licence.

Change inattention

Change inattention, which used to be known as “change blindness”, is a phenomenon where significant changes in a visual scene go unnoticed by observers, especially when these changes occur amidst visual disruptions or when the observer’s focus is elsewhere. Research indicates that high rates of change inattention are present during driving simulations across different age groups, suggesting that this phenomenon affects a wide range of real-world tasks (Saryazdi, Bak, & Campos, 2019).

Further investigation into the spatial dimensions of attention reveals that the detection of unexpected objects significantly increases when they appear outside the primary focus area, challenging traditional theories of attentional focus (Kreitz, Hüttermann, & Memmert, 2020). How does our attention to space around us affect our ability to notice unexpected changes or objects in our visual field? Traditional theories of attention, such as the spotlight model, suggest that our attention works like a spotlight that highlights a specific area of our visual field. Anything within this spotlight is processed in detail, while information outside of it receives less attention or might be ignored.

However, research by Kreitz, Hüttermann, & Memmert (2020) challenges this view by showing that people are more likely to detect unexpected objects when these objects appear outside of their primary focus area — outside the spotlight, contrary to what traditional theories would predict. This suggests that our attentional focus is not just a simple spotlight on a fixed area. Instead, it might be more flexible or distributed in a way that allows for the detection of significant stimuli even outside of the central area of focus.

This finding has implications for how we understand human attention and perception. It suggests that while we may focus our attention on a particular task or area, our perceptual system remains alert to important or novel stimuli that occur outside of this focus area. This could be an adaptive feature, allowing us to notice potentially relevant or threatening stimuli in our environment, enhancing our ability to respond to unexpected events.

Watch the video: Why You Miss Big Changes Right Before Your Eyes | NOVA | Inside NOVA (4 minutes)

“Why You Miss Big Changes Right Before Your Eyes | NOVA | Inside NOVA:” video by NOVA PBS Official is licensed under the Standard YouTube licence.

Image Attributions

Figure SP.1. “Amazing classical piano player” by Josh Appel on Unsplash.

Figure SP.2. Outcomes of a Signal Detection Analysis by Jennifer Walinga and Charles Stangor is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.

To calculate this time, we used a reading speed of 150 words per minute and then added extra time to account for images and videos. This is just to give you a rough idea of the length of the chapter section. How long it will take you to engage with this chapter will vary greatly depending on all sorts of things (the complexity of the content, your ability to focus, etc.).