Chapter 9. Cognition

Cognitive Processes That May Lead to Inaccuracy

Dinesh Ramoo

Approximate reading time: 20 minutes

Learning Objectives

By the end of this section, you will be able to:

- Discuss why cognitive biases are used

- Explain confirmation bias, how to avoid it, and its role in stereotypes

- Explain hindsight bias

- Explain functional fixedness

- Explain the framing effect

- Explain cognitive dissonance

Cognitive Biases: Efficiency Versus Accuracy

We have seen how our cognitive efforts to solve problems can make use of heuristics and algorithms. Research has shown that human thinking is subject to a number of cognitive processes; we all use them routinely, although we might not be aware that we do. While these processes provide us with a level of cognitive efficiency in terms of time and effort, they may result in problem-solving and decision-making that is flawed. In this section, we will discover a number of these processes.

Cognitive biases are errors in memory or judgment that are caused by the inappropriate use of cognitive processes. Refer to Table CO.4 for specific examples. The study of cognitive biases is important, both because it relates to the important psychological theme of accuracy versus inaccuracy in perception and because being aware of the types of errors that we may make can help us avoid them, thereby improving our decision-making skills.

| Cognitive Process | Description | Examples of Threats to Accurate Reasoning |
|---|---|---|
| Confirmation bias | The tendency to verify and confirm our existing memories rather than to challenge and disconfirm them | Once beliefs become established, they become self-perpetuating and difficult to change, regardless of their accuracy. |
| Functional fixedness | When schemas prevent us from seeing and using information in new and nontraditional ways | Creativity may be impaired by the overuse of traditional, expectancy-based thinking. |
| Counterfactual thinking | When we “replay” events such that they turn out differently, especially when only minor changes in the events leading up to them make a difference | We may feel particularly bad about events that might not have occurred if only a small change had occurred before them. |
| Hindsight bias | The tendency to reconstruct a narrative of the past that includes our ability to predict what happened | Knowledge is reconstructive. |
| Salience | When some stimuli (e.g., those that are colourful, moving, or unexpected) grab our attention, making them more likely to be remembered | We may base our judgments on a single salient event while we ignore hundreds of other equally informative events that we do not see. |

Confirmation bias is the tendency to verify and confirm our existing beliefs and to ignore or discount information that disconfirms them. For example, one might believe that organic produce is inherently better: higher in nutrition, lower in pesticides, and so on. Similarly, even though white eggs and brown eggs do not differ nutritionally, people often think that brown eggs are healthier. Religious people are often quick to notice inconsistencies and errors in religions other than their own while not noticing the same in the one they happen to follow. Falling prey to confirmation bias would mean paying attention to information that confirms the superiority of organic produce while ignoring or disbelieving any accounts that suggest otherwise, or believing that one’s own religion is superior and true whereas every other religion is man-made. Confirmation bias is psychologically comfortable because it allows us to proceed to make decisions with our views unchallenged. However, just because something “feels” right does not necessarily make it so. Confirmation bias can lead people to make poor decisions because they fail to pay attention to contrary evidence.

A good example of confirmation bias is seen in people’s attention to political messaging. Jeremy Frimer, Linda Skitka, and Matt Motyl (2017) found that both liberal and conservative voters in Canada and the United States were averse to hearing about the views of their ideological opponents. Furthermore, the participants in their studies indicated that their aversion was not because they felt well-informed, but rather because they were strategically avoiding information that would challenge their pre-existing views. A related phenomenon appeared in a study of The Colbert Report, a comedy show created as a satire of conservative talk shows in the United States. LaMarre et al. (2009) showed segments of the show to a sample of 332 people and asked for their opinions. They found that participants with a conservative political leaning were more likely to say that Colbert was only pretending to be joking and that he genuinely meant it when he criticized liberal policies. You may be able to think of more recent examples from podcasts and online news-sharing platforms. As researchers point out, confirmation bias can result in people on all sides of the political spectrum remaining within their ideological bubbles, avoiding dialogue with opposing views, and becoming increasingly entrenched and narrow-minded in their positions.

Avoiding confirmation bias and its effects on reasoning requires, first, acknowledging that it exists and, second, working to reduce its effects by actively and systematically reviewing disconfirmatory evidence (Lord, Lepper, & Preston, 1984). For example, someone who believes that vaccinations are dangerous might change their mind if, after considering some of the evidence, they wrote down the arguments in favour of vaccination.

Confirmation bias also plays a role in stereotypes, which are sets of beliefs, or schemas, about the characteristics of a group. John Darley and Paget Gross (1983) demonstrated how schemas about social class could influence memory. In their research, they gave participants a picture of, and some information about, a Grade 4 girl named Hannah. To activate a schema about her social class, the researchers showed half of the participants a picture of Hannah sitting in front of a nice suburban house and showed the other half a picture of her in front of an impoverished house in an urban area. Next, the participants watched a video that showed Hannah taking an intelligence test. As the test went on, Hannah got some of the questions right and some of them wrong, but the number of correct and incorrect answers was the same in both conditions. Then, the participants were asked to remember how many questions Hannah got right and wrong. Demonstrating that stereotypes had influenced memory, the participants who thought that Hannah came from an upper-class background remembered her getting more correct answers than did those who thought she was from a lower-class background. You can imagine how the stereotypes that we hold about certain groups can affect our behaviour toward members of those groups.

All of us, from time to time, fall prey to the feeling, “I knew it all along!” This tendency is called hindsight bias, and it refers to the narrative we construct about a past event that helps us make sense of it. In effect, hindsight bias is the brain’s tendency to rewrite our memory of what we knew once an event has already happened. The feeling that “I knew it all along” is coupled with an inability to reconstruct the lack of knowledge that existed beforehand. Thus, we overestimate our ability to predict the future because we have reconstructed an illusory past (Kahneman, 2011).

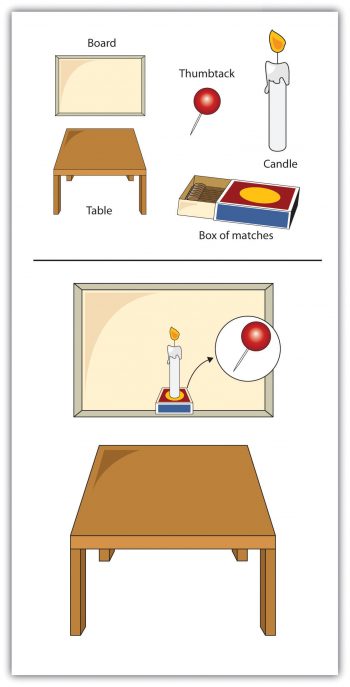

Functional fixedness occurs when people’s schemas prevent them from using an object in new and nontraditional ways. Karl Duncker (1945) gave participants a candle, a box of thumbtacks, and a book of matches; participants were then asked to attach the candle to the wall so that it did not drip onto the table underneath it (Figure CO.6). Few of the participants realised that the box could be tacked to the wall and used as a platform to hold the candle. The problem again is that our existing memories are powerful, and they bias the way we think about new information. Because the participants were fixated on the box’s normal function of holding thumbtacks, they could not see its alternative use.

Humans tend to avoid risk when making decisions, but this tendency depends on how a problem is presented. The framing effect is the tendency for judgments to be affected by the framing, or wording, of the problem. When asked to choose between two alternatives, one framed in terms of avoiding losses and the other in terms of maximising gains, people will make different choices about the same problem. Furthermore, people react emotionally in different ways to the same event when it is framed differently. For example, being told that the probability of recovery from an illness is 90% is more reassuring than being told that the mortality rate of the illness is 10% (Kahneman, 2011).

Amos Tversky and Daniel Kahneman (1981) devised an example to show the framing effect:

Imagine that the United States is preparing for the outbreak of an unusual disease, which is expected to kill 600 people. Two alternative programs to combat the disease have been proposed. Assume that the exact scientific estimate of the consequences of the programs are as follows:

- If Program A is adopted, 200 people will be saved. [72%]

- If Program B is adopted, there is 1/3 probability that 600 people will be saved and 2/3 probability that no people will be saved. [28%]

Which of the two programs would you favour?

The majority of participants (72%, as indicated in brackets) chose Program A. In other words, they favoured a sure thing over a gamble. In another version of the same problem, the options were framed differently:

- If Program C is adopted, 400 people will die. [22%]

- If Program D is adopted, there is 1/3 probability that nobody will die and 2/3 probability that 600 people will die. [78%]

Which of the two programs would you favour?

This time, the majority of participants (78%) chose the uncertain Program D. Look closely, however, and you will note that the consequences of Programs A and C are identical, as are the consequences of Programs B and D. In the first problem, participants chose the sure gain over the riskier, though potentially more lifesaving, option. In the second problem, the opposite choice was made: participants favoured the risky option, which carried a 2/3 probability that 600 people would die, over the certainty that 400 would die. In other words, when choices are framed in terms of potential losses, people tend to seek risk, whereas when the same choices are framed in terms of gains, people tend to avoid risk. The important message here is that only the framing of the problem causes people to shift from risk aversion to risk seeking; the problems are identical. As Daniel Kahneman (2011) pointed out, even trained medical and policy professionals are susceptible to framing effects like these, demonstrating that education and experience may not be powerful enough to overcome these cognitive tendencies.
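One way to check that the two versions of the disease problem are equivalent is to compute the expected number of people saved under each program, out of the 600 expected to die. This worked calculation is an illustration added here rather than part of Tversky and Kahneman’s (1981) presentation, and it assumes the expected value (the probability-weighted average outcome) as the yardstick for comparison:

$$
\begin{aligned}
E[\text{saved} \mid A] &= 200 \\
E[\text{saved} \mid B] &= \tfrac{1}{3}(600) + \tfrac{2}{3}(0) = 200 \\
E[\text{saved} \mid C] &= 600 - 400 = 200 \\
E[\text{saved} \mid D] &= \tfrac{1}{3}(600) + \tfrac{2}{3}(0) = 200
\end{aligned}
$$

All four programs have the same expected outcome. Programs A and C describe the same certain result (200 saved, 400 dead), and Programs B and D describe the same gamble; only the wording shifts between lives saved and lives lost.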

All of us are prone to thinking about past events and imagining that they might have turned out differently. If we can easily imagine an outcome that is better than what actually happened, then we may experience sadness and disappointment; on the other hand, if we can easily imagine that a result might have been worse than what actually happened, we may be more likely to experience happiness and satisfaction. The tendency to think about and experience events according to “what might have been” is known as counterfactual thinking (Kahneman & Miller, 1986; Roese, 2005).

Imagine, for instance, that you were participating in an important contest, and you finished in second place, winning the silver medal. How would you feel? Certainly, you would be happy that you won the silver medal, but wouldn’t you also be thinking about what might have happened if you had been just a little bit better — you might have won the gold medal! On the other hand, how might you feel if you won the bronze medal for third place? If you were thinking about the counterfactuals — that is, the “what might have beens” — perhaps the idea of not getting any medal at all would have been highly accessible; you’d be happy that you got the medal that you did get, rather than coming in fourth.

Victoria Medvec, Scott Madey, and Thomas Gilovich (1995) investigated this idea by videotaping the responses of athletes who won medals in the 1992 Summer Olympic Games (Figure CO.7). They videotaped the athletes both as they learned that they had won a silver or a bronze medal and again as they were awarded the medal. Then, the researchers showed these videos, without any sound, to raters who did not know which medal each athlete had won. The raters were asked to indicate how they thought the athlete was feeling, using a range of feelings from “agony” to “ecstasy.” The results showed that the bronze medalists were, on average, rated as happier than were the silver medalists. In a follow-up study, raters watched interviews with many of these same athletes as they talked about their performance. The raters indicated what we would expect on the basis of counterfactual thinking: the silver medalists talked about their disappointment at having finished second rather than first, whereas the bronze medalists focused on how happy they were to have finished third rather than fourth.

You might have experienced counterfactual thinking in other situations. If you were driving across the country and your car was having engine trouble, you might feel an increasing desire to make it home as you approached the end of your journey; you would be extremely disappointed if the car broke down only a short distance from your home. Perhaps you have noticed that once you get close to finishing something, you feel like you really need to get it done. Counterfactual thinking has even been observed in juries. Jurors who were asked to award monetary damages to accident victims offered substantially more compensation when the victim had nearly avoided the accident than when the accident seemed inevitable (Miller, Turnbull, & McFarland, 1988).

Psychology in Everyday Life: Cognitive Dissonance and Behaviour

One of the most important findings in social-cognitive psychology research was made by Leon Festinger more than 60 years ago. Festinger (1957) found that holding two contradictory attitudes or beliefs at the same time, or acting in a way that contradicts a pre-existing attitude, creates a state of cognitive dissonance, which is a feeling of discomfort or tension that people actively try to reduce. Dissonance can be reduced either by changing the behaviour or by changing the belief. For example, a person who smokes cigarettes while believing in their harmful effects is experiencing incongruence between belief and behaviour; this creates cognitive dissonance. The person can reduce the dissonance either by changing their behaviour (e.g., giving up smoking) or by changing their belief (e.g., convincing themselves that smoking isn’t that bad, rationalising that lots of people smoke without harmful effect, or denying the evidence against smoking). Another example could be a parent who spanks their child while also expressing a belief that spanking is wrong. The dissonance created here can similarly be reduced either by changing their behaviour (e.g., no longer spanking) or by changing their belief (e.g., rationalising that the spanking was a one-off situation, adopting the view that spanking is occasionally justified, or rationalising that many adults were spanked as children).

Cognitive dissonance is both cognitive and social because it involves thinking and, sometimes, social behaviours. Festinger (1957) wanted to see how believers in a doomsday cult would react when told the end of the world was coming, and later, when it failed to happen. By infiltrating a genuine cult, Festinger was able to extend his research into the real world. The cult members devoutly believed that the end was coming but that they would be saved by an alien spaceship, as foretold to them in a prophecy by the cult leader. Accordingly, they gave away their possessions, quit their jobs, and waited for rescue. When the prophesied end and rescue failed to materialize, one might think that the cult members would experience an enormous amount of dissonance; their strong beliefs, backed up by their behaviour, were incongruent with the failure of the prophecy. However, instead of reducing their dissonance by ceasing to believe that the end of the world was nigh, the cult members actually increased their faith by altering it to include the view that the world had in fact been saved by the demonstration of their faith. They became even more evangelical.

You might be wondering how cognitive dissonance operates when the inconsistency is between two attitudes or beliefs. For example, suppose a family’s wage-earner is a staunch supporter of the Green Party, believes in the science of climate change, and is generally aware of environmental issues. Then the wage-earner loses their job. They send out many job applications, but nothing materializes until there is an offer of full-time employment from a large petroleum company to manage the town’s gas station. Here, environmental beliefs are pitted against the need for a job at a gas station: cognitive dissonance in the making. How would you reduce your cognitive dissonance in this situation? Would you take the job and rationalise that environmental beliefs can be set aside this time, that one gas station is not so bad, or that there are no other jobs? Or would you turn down the job, knowing your family needs the money, but retain your environmental beliefs?

Image Attributions

Figure CO.6. Figure 8.18 as found in Introduction to Psychology is shared under a CC BY-NC-SA 4.0 license and was authored, remixed, and/or curated by Jorden A. Cummings via source content that was edited to the style and standards of the LibreTexts platform. [Source chapter: 8.4: Accuracy and Inaccuracy in Memory and Cognition]

Figure CO.7. 2010 Winter Olympic Men’s Snowboard Cross medalists by Laurie Kinniburgh is used under a CC BY 2.0 license.

To calculate this time, we used a reading speed of 150 words per minute and then added extra time to account for images and videos. This is just to give you a rough idea of the length of the chapter section. How long it will take you to engage with this chapter will vary greatly depending on all sorts of things (the complexity of the content, your ability to focus, etc.).